In the press conference to announce to an unsurprised world that 2023 was the warmest year on record, Gavin Schmidt noted that expressing that temperature relative to a pre-industrial baseline introduced a large uncertainty. That uncertainty has a lot to do with sea-surface temperatures. This statement has since become a news article in Science. It’s well worth a read as it gets things mostly right (the opening paragraph should be zapped though) and quotes most of the right people, i.e. the people actually working on this topic rather than the usual faces. A sad fact is that it namechecks almost everyone currently working on the problem. Elizabeth Kent says it best: “For the importance of these data sets, the number of people working on it is just unimaginably small.”

Some time ago[1], I organised a meeting to discuss systematic errors in historical SST measurements. I think we maxed out at 25 people in the room with 2 more joining remotely. That included a few people working on climate data but without a specific interest in SST, a statistician with a general interest in climate data, and others from the Met Office who were simply interested in SST data[2], but not actually working in the area. The core of around 15 people were authors on a paper that came out of the workshop, “A Call for New Approaches to Quantifying Biases in Observations of Sea Surface Temperature”, but even then, they weren’t all working on the topic. Since the publication of the paper, the number has fluctuated downwards. People have retired or moved on. The problems, however, remain.

At the same time, a huge amount of interesting work has been done. The people who were active have been very active. The HOSTACE project run from NOC in Southampton produced a series of extremely interesting papers characterising the diurnal cycle of SST, reconstructing ship voyages from unlabelled observations, lab testing bucket models, and inferring measurement methods from data characteristics (including the diurnal cycle), as well as underpinning work on the database, ICOADS, that is the foundation on which all historical SST research is ultimately based. There was more besides.

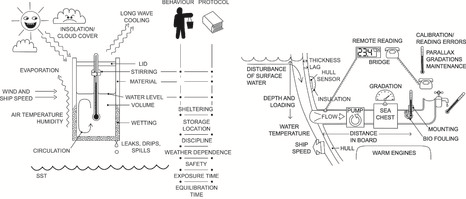

The import of these for global temperature might not seem obvious. Understanding buckets is important because they were used to make most of the measurements in the early record. Canvas buckets can lose heat during hauling. Some modern rubber buckets might be susceptible to solar heating. Lab work helped to characterise the systematic errors[3], tested the models and assumptions used to correct for them, and suggested some strategies for a more flexible approach to the corrections. Reconstructing ship voyages is important because each ship is different. Keeping track of which ships made which measurements is vital for getting the uncertainties right[4], which in turn affects how you interpolate the data and compensate for gaps in coverage. Inferring measurement method is important because that information is very often missing from ICOADS (or just wrongly recorded) and is vital for understanding the data.

More recently, a group at Harvard, principally Duo Chan, produced a series of interesting papers that identified correlated errors at the level of collections of data known as “decks”[5] and individual countries. Previously, errors were considered at the level of individual observations, individual ships, and by measurement method. The large-scale correlated errors associated with decks and countries imply that uncertainty is underestimated to a significant degree, but it’s unclear whether this matters globally or whether its effect is largely regional. Duo’s work also extends the characterisation of measurement methods, looks in detail at the Second World War (providing good evidence of what was long suspected: that the period is too warm relative to years before and after), estimates the uncertainty associated with reported positions in the 19th Century, and compares SSTs to land temperatures to assess the long-term trends.
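To see why shared errors matter for the global mean, here is a toy sketch (not how HadSST or Duo's analysis actually does it). It assumes equal-weight grid cells, a made-up 0.5 °C independent error per cell and a made-up 0.5 °C error shared by every cell in the same group, where a "group" stands in for a ship or a deck. The function names and numbers are purely illustrative.

```python
def mean_uncertainty(cov):
    """Standard uncertainty of the equal-weight mean of n grid cells.

    Var(mean) = w^T C w with w_i = 1/n, which reduces to sum(C) / n^2.
    """
    n = len(cov)
    total = sum(sum(row) for row in cov)
    return (total / (n * n)) ** 0.5


def build_covariance(groups, sigma_random, sigma_shared):
    """Error covariance where cells with the same group label share an error.

    groups[i] is a hypothetical label (e.g. a ship or deck ID) for cell i.
    Each cell has an independent error (sigma_random) on the diagonal, plus
    an error common to its group (sigma_shared) that also fills in the
    off-diagonal elements linking cells in that group.
    """
    n = len(groups)
    cov = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if groups[i] == groups[j]:
                cov[i][j] += sigma_shared ** 2
            if i == j:
                cov[i][j] += sigma_random ** 2
    return cov


# Illustrative numbers only: 8 grid cells, 0.5 degC random and shared errors.
cells = 8
independent = build_covariance(list(range(cells)), 0.5, 0.5)  # purely diagonal
two_decks = build_covariance([0, 0, 0, 0, 1, 1, 1, 1], 0.5, 0.5)
one_deck = build_covariance([0] * cells, 0.5, 0.5)

u_independent = mean_uncertainty(independent)
u_two_decks = mean_uncertainty(two_decks)
u_one_deck = mean_uncertainty(one_deck)

print(u_independent, u_two_decks, u_one_deck)
```

The per-cell variance is identical in all three cases. Only the off-diagonal terms change, and the uncertainty in the mean grows as the shared error spans more cells. A real dataset would use area weights and a far larger matrix, but the mechanism is the same.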

There has also been a large amount of newly digitised data coming from citizen science activities such as Old Weather. Digitised data can help to fill gaps in the maps, which are large in the nineteenth century (though not as large as you might expect) and at other times and places: the world wars, the Arctic and Antarctic, for example. In addition, newly digitised data is likely to have more complete meteorological reports and better metadata, both of which are helpful for understanding measurement errors. On the other hand, as mentioned in the article, a “staggering number of log books have yet to be digitized”. Estimates vary, but there might be hundreds of millions, if not billions, of reports sitting in libraries and archives.

Lots of these new results, the understanding that comes with them, and many of the newly[6] digitised data have yet to feed into global temperature data sets. It takes time[7] and, in some cases, the way that the datasets are built makes it harder. There’s no existing framework that could easily incorporate the methodology employed by Duo Chan, for example. A holistic approach would involve building a new dataset from the ground up. This is not easy.

I put together a prototype version of HadSST which incorporated a couple of these updates, principally: deck level correlated errors (replicating Duo’s results via a completely different method) and the Second World War bias (which is handled separately in HadSST), as these fit neatly within the HadSST4/HadISST2 framework. It was also set up to use ship tracking information to further improve the error covariances. However, the current version of HadSST doesn’t include these. The article promises a new version of ERSST, v6, that I’m very excited to see. There are few other datasets. The JMA data set COBE-SST-2 is a generation or so behind the current versions of ERSST and HadSST, and hasn’t been updated in nearly a decade. There is an historical SST data set produced by CMA, but it is heavily based on ERSST (so it’s not independent) and only starts in 1900 (so doesn’t tell us anything new about the “pre-industrial” period). Aside from the slow feed through from research into operations, there is a surprising lack of diversity. Just two SST datasets (HadSST and ERSST) provide the ocean component of all the current regularly updated global temperature series that extend back to the late 19th century, to wit: HadCRUT5, Berkeley Earth, GISTEMP, NOAAGlobalTemp, and Kadow et al. It even holds for those that aren’t being updated, e.g. Vaccaro et al., Ilyas et al., CMST. By comparison, for ocean heat content, there are about 17 different datasets; for ice sheet mass balance (across Greenland and Antarctica) there are around 50.

The process of getting observations into the archives that people actually use has long been a bottleneck. Most long SST datasets use ICOADS, a US data set which underpins not just SST datasets but also features in a wide range of other products including reanalyses. Given its importance, it has long been severely under-resourced. A European alternative via Copernicus is being developed, but isn’t yet operational or as complete as ICOADS. The data banks that underlie both ICOADS and the Copernicus effort are formidably messy[8]. The data banks contain numerous duplicated reports, sometimes in different states of completion, with various idiosyncratic processing decisions, some degree of scrambling[9], and a wealth of metadata that is not always easily organised.

The other thing to bear in mind when considering the uncertainties in historical global SST records is the stuff that appears in the “discussion” sections of papers, in theses that never got written up as a paper[10], or buried in “supplementary info”, but not in the headline conclusions. Some of this is detailed information that is less likely to impact global temperatures – improving on generic error estimates, for example – but other parts are perhaps more consequential. The article mentions that ERSST uses air temperatures to establish its bias adjustments. HadSST does too prior to 1940. What it doesn’t mention is that the latest versions of the marine air temperature datasets do not go back to 1850 because of large, hard-to-assess biases in the earliest part of the record. CLASSNMAT, for example, starts in 1880. Work is ongoing to extend these records back to 1850 and beyond via the GloSAT project but that dataset does not yet exist. What this means for SST datasets using air temperatures before 1880 is an open question. There are indications that global temperatures are too high in this period – based on the work of Duo comparing to coastal stations, and Qingxiang Li as well as an earlier paper by Cowtan and co. – and also too cool just afterwards because of potential errors in the SSTs. The warm early period is consistent with the idea that unlabelled obs in the earliest part of the record were made in the middle of the day so both air temperature and sea surface temperature were biased warmer than assumed. A possible consequence of that is that all estimates of global temperature exhibit too little warming relative to pre-industrial.

Even stuff that is in the abstracts is often overlooked. As you look back in time, the first large difference between ERSST and HadSST is in the early 1990s. That’s when the interesting uncertainties really start. It just gets more interesting the further you go.

There are also extraordinary gaps in our understanding of marine temperatures. There are no reliable measurements of diurnal temperature range over the vast majority of the ocean. Both ships and commonly used buoys (e.g. in the Pacific TAO array) have biases associated with solar heating of the ship, sensor or enclosure. In the modern period, there are very few marine air temperature measurements. Night-time ship observations are available, but in decreasing numbers.

Finally, the article assumes that these improvements in data and understanding will reduce uncertainty in long-term change. They might. On the other hand, they might not. The 1.3 to 1.5°C range quoted is probably an underestimate. As Robert Rohde noted, “They can’t both be right” but what’s more concerning is that perhaps neither one is. Either way, these improvements will certainly help us to better understand the uncertainty, but the effect of that isn’t guaranteed. Such is research. You never know what you’re going to get. We might not be as far from 1.5°C as we thought.

-fin-

1. Around 2016. Probably.

2. Or just wanted a change of scenery.

3. Stirring matters.

4. The HadSST4 dataset underestimates some components of uncertainty because this information wasn’t available. The uncertainties are coded using an error covariance matrix. In most applications, errors are assumed to be uncorrelated, which gives a purely diagonal covariance. When errors correlate, you get off-diagonal elements that connect locations visited by observations that share an error, e.g. all grid cells visited by a particular ship, or, in the case of Duo’s work, any grid cell visited by a ship from a particular deck. The larger the off-diagonal components and the more of the matrix they cover – the stronger and more extensive the correlations – the larger the uncertainty in the global mean (in general).

5. After the decks of punched cards on which the data were recorded.

6. It can take a long time for digitised data to reach the archives used for global data sets.

7. Interpolation of the HadCRUT dataset (in HadCRUT5) took some years after the publication of Cowtan and Way’s paper showing the systematic effect of interpolation related to the polar hole.

8. The people who work with it directly can spend hours at conferences swapping stories about the madness that lies therein.

9. Deck 732 is an enduring mystery.

10. For example, the discovery by Giulia Carella that a subset of ships have engine room biases that correlate with the actual SST, usually being larger when the water was colder.
